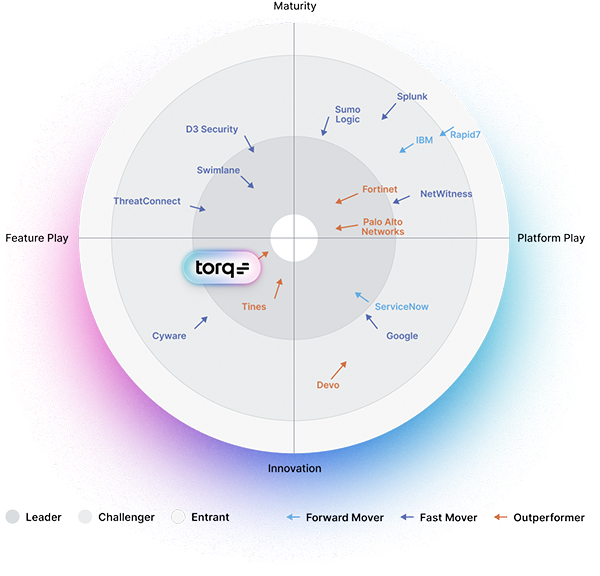

The Security Hyperautomation Pioneer

The enterprise-grade, AI-driven hyperautomation platform that makes autonomous security operations a reality

Your Security Product’s Favorite Security Product

10X Faster ROI Than Legacy SOAR

Create and deploy complex, sophisticated workflows in minutes

Enterprise Architecture

Cloud-native, multi-tenant, zero-trust architecture that scales with your needs

Connect to Everything

Hyperautomate every app, every stack, across cloud, on-premises, and hybrid environments

No-Code or Your Code

Go beyond APIs, with support for any CLI, platform, and programming or scripting language

San Francisco | May 6-9, 2024 | Booth #4415